Again, thank you, Anoop, for the scientific explanation.

Good, clearly written article as always.

Studies are conflicting, so I don’t believe in scientific studies

October 11 2013

This is one of the most common arguments against an evidence-based or scientific approach. I have lost count of the times I have read comments on Facebook and forums that say ‘one study might say it helps while another might say it doesn’t’ or ‘you can always find a study which agrees with your position’. Hence, the argument goes, a scientific discussion based on studies is worthless.

It is a major criticism of an evidence-based approach, and none of the articles online have covered it well enough. I have read and heard people who are supposed to be experts in the scientific approach throw up their hands in despair when they see conflicting studies on the same topic.

So what is wrong with this argument?

Comparing apples & oranges: The most obvious reason is that studies which appear very similar often differ in important ways. The differences can lie in the population studied, the intervention or treatment, the outcome measure, the study duration, and bias. For example, you could have two studies with the same weight-training program, the same outcome measure (muscle mass) and the same program length, and still get conflicting results. Why? Because one study used trained subjects and the other used untrained subjects. As expected, the untrained subjects improved a lot, while the trained subjects improved only a little.

The point is that even though the studies may appear similar, they differ in certain fundamental aspects and hence should not be grouped together in the first place. It is as stupid as saying apples and oranges are conflicting!
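Meta-analysts have a standard check for this kind of apples-and-oranges mix: a heterogeneity statistic such as Cochran's Q and I², which measures how much studies disagree beyond what chance would explain. Below is a minimal Python sketch, assuming four hypothetical studies of the same program that report a mean muscle gain and its standard error; the numbers and the `heterogeneity` helper are made up for illustration.

```python
# Hypothetical studies of one training program: (mean muscle gain in kg, standard error).
# These numbers are invented for illustration, not taken from real studies.
UNTRAINED = [(2.0, 0.4), (1.8, 0.4)]
TRAINED = [(0.3, 0.4), (0.4, 0.4)]


def heterogeneity(studies):
    """Return (pooled mean, Cochran's Q, I^2) using inverse-variance weights."""
    weights = [1 / se**2 for _, se in studies]  # precise studies count more
    pooled = sum(w * y for w, (y, _) in zip(weights, studies)) / sum(weights)
    # Q: weighted squared distance of each study from the pooled mean
    q = sum(w * (y - pooled) ** 2 for w, (y, _) in zip(weights, studies))
    df = len(studies) - 1
    # I^2: share of the total variation that exceeds what chance would produce
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, q, i2


for label, group in [
    ("all four", UNTRAINED + TRAINED),
    ("untrained only", UNTRAINED),
    ("trained only", TRAINED),
]:
    pooled, q, i2 = heterogeneity(group)
    print(f"{label:15s} pooled gain {pooled:.2f} kg, Q = {q:.2f}, I^2 = {i2:.0%}")
```

With these made-up numbers, lumping all four studies together gives an "average" gain of about 1.1 kg that describes neither population, and an I² of roughly 80% flags that the studies disagree far more than chance allows. Within each subgroup the disagreement disappears (I² = 0%).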


Similar Studies Conflict: When similar studies do conflict, it is largely because the studies didn’t have enough subjects. In other words, the treatment may be effective, but the effect wasn’t statistically significant because the sample size was too small. We have known this for a long time, and we also have an elegant solution to the problem called meta-analysis. In simple terms, meta-analysis is the systematic process of combining small studies into one big study with a large number of subjects. In fact, this is exactly why meta-analysis has the greatest validity and sits at the top of the pyramid in the hierarchy of evidence in an evidence-based approach.
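The sample-size point can be made concrete with a quick power calculation: the chance that a study detects an effect that really exists. This is a minimal Python sketch, assuming a two-group comparison analysed with a normal-approximation z-test and a hypothetical true effect of half a standard deviation (Cohen's d = 0.5). The function names `approx_power` and `n_for_power` are illustrative, not from any library.

```python
from math import ceil, sqrt
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution (mean 0, SD 1)


def approx_power(effect_size: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test.

    effect_size is the true standardized mean difference (Cohen's d).
    The normal approximation slightly overstates power for very small groups.
    """
    z_crit = _Z.inv_cdf(1 - alpha / 2)          # 1.96 when alpha = 0.05
    shift = effect_size * sqrt(n_per_group / 2)  # expected value of the z-statistic
    return _Z.cdf(shift - z_crit) + _Z.cdf(-shift - z_crit)


def n_for_power(effect_size: float, power: float = 0.8, alpha: float = 0.05) -> int:
    """Approximate per-group sample size needed to reach the requested power."""
    z_crit = _Z.inv_cdf(1 - alpha / 2)
    z_power = _Z.inv_cdf(power)
    return ceil(2 * ((z_crit + z_power) / effect_size) ** 2)


if __name__ == "__main__":
    for n in (10, 30, 100):
        print(f"n = {n:3d} per group -> power ~ {approx_power(0.5, n):.2f}")
    print("per-group n needed for 80% power:", n_for_power(0.5))
```

Under these assumptions, a study with 10 subjects per group detects the real effect only about one time in five, so most small studies of an effective treatment will come out "not significant". Roughly 63 subjects per group are needed for 80% power, which is the kind of number a meta-analysis reaches by pooling.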

So both camps are wrong: people who cherry-pick favorable studies to support their claims, and people who argue that studies conflict and are therefore worthless. Both are clearly unaware of the basics of an evidence-based approach.

Blah blah... can you give an example?

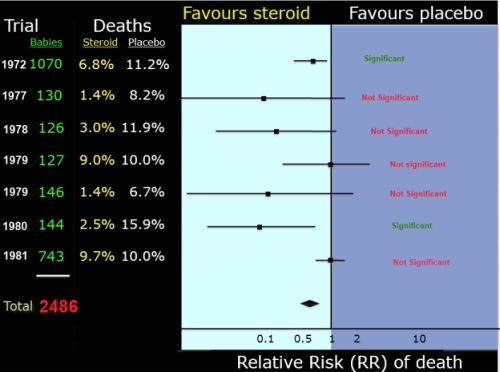

Here is a very famous meta-analysis that examined whether a short, inexpensive course of corticosteroids given to pregnant women would reduce the number of babies dying from the complications of immaturity. Its results are shown in the figure, in a format called a forest plot.

Now what the heck do all those lines mean?

- Each horizontal line represents one study, and its length shows the confidence interval of that study’s result. The year the study was conducted is shown on the left. The shorter the line, the greater our confidence in the result.

- The vertical line is the line of ‘no difference’. If a horizontal line touches the vertical line, the result of that study is not statistically significant; that is, the study failed to show a benefit of the treatment.

- As you can see, only 2 studies (in green) are significant, whereas 5 studies (in red) are not. In other words, 2 studies show a benefit while 5 studies show none. If you go by that alone, the studies are indeed conflicting and corticosteroid treatment looks clearly ineffective!
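The reading rules for a forest plot can be sketched in Python. This is a minimal illustration, assuming each study reports deaths out of the number of babies in the treated and control groups, and that each horizontal line is a 95% confidence interval for the odds ratio computed on the log scale (Woolf's method). The function names and counts are hypothetical, not the actual corticosteroid trial data. An odds ratio below 1 means fewer deaths with treatment, i.e. to the left of the line.

```python
from math import exp, log, sqrt


def odds_ratio_ci(deaths_tx, n_tx, deaths_ctl, n_ctl, z=1.96):
    """Odds ratio with a Woolf (log-scale) 95% confidence interval.

    Assumes no zero cells; real meta-analyses apply a continuity correction.
    """
    a, b = deaths_tx, n_tx - deaths_tx        # treated: died / survived
    c, d = deaths_ctl, n_ctl - deaths_ctl     # control: died / survived
    log_or = log((a * d) / (b * c))
    se = sqrt(1 / a + 1 / b + 1 / c + 1 / d)  # standard error of log(OR)
    return exp(log_or), exp(log_or - z * se), exp(log_or + z * se)


def crosses_no_effect_line(lower, upper):
    """True if the study's line touches the vertical 'no difference' line at OR = 1."""
    return lower <= 1.0 <= upper


# Two hypothetical studies with the same death rates but different sizes.
for label, counts in [("small study", (10, 60, 15, 60)), ("large study", (100, 600, 150, 600))]:
    or_, lo, hi = odds_ratio_ci(*counts)
    verdict = "not significant" if crosses_no_effect_line(lo, hi) else "significant"
    print(f"{label}: OR {or_:.2f} (95% CI {lo:.2f} to {hi:.2f}) -> {verdict}")
```

Both hypothetical studies have the same odds ratio of 0.6. Only the larger one produces a line short enough to stay clear of the no-difference line, which is why a "negative" small study is often just an underpowered one.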

Based on this ‘inconclusive’ evidence, most obstetricians never gave corticosteroid therapy. In 1989, a meta-analysis was finally conducted. As shown in the figure, when the same earlier studies were combined to get a total of 2486 subjects, there was a very clear benefit of corticosteroid treatment. The diamond sits to the left of the vertical line, favoring steroid treatment, and shows that steroid treatment reduced the odds of the babies dying by 30 to 50 percent! Unfortunately, it took another decade, another 10 studies and another meta-analysis before the treatment was routinely practiced by obstetricians. So we were late to do a meta-analysis, and when one was done, we didn’t bother to look it up and kept wasting money on new studies. And what does that mean?

That simply means that tens of thousands of babies probably suffered and died needlessly!
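The pooling step that turned "conflicting" trials into a clear answer can be sketched in Python. This is a minimal fixed-effect, inverse-variance pooling of log odds ratios, one common meta-analysis method; the method used in the actual corticosteroid reviews may differ. The five trials below are made-up numbers chosen so that each one alone is not significant, and the helper names are hypothetical.

```python
from math import exp, log, sqrt

# Hypothetical trials: (deaths_treated, n_treated, deaths_control, n_control).
# Invented for illustration; NOT the real corticosteroid data.
TRIALS = [
    (10, 60, 15, 60),
    (8, 50, 13, 50),
    (12, 70, 17, 70),
    (6, 40, 10, 40),
    (9, 55, 14, 55),
]


def log_or_and_se(deaths_tx, n_tx, deaths_ctl, n_ctl):
    """Log odds ratio of one trial and its standard error (no zero cells assumed)."""
    a, b = deaths_tx, n_tx - deaths_tx
    c, d = deaths_ctl, n_ctl - deaths_ctl
    return log((a * d) / (b * c)), sqrt(1 / a + 1 / b + 1 / c + 1 / d)


def fixed_effect_pool(trials, z=1.96):
    """Inverse-variance pooled odds ratio with its 95% confidence interval."""
    total_weight, weighted_sum = 0.0, 0.0
    for trial in trials:
        lor, se = log_or_and_se(*trial)
        w = 1 / se**2  # larger, more precise trials get more weight
        total_weight += w
        weighted_sum += w * lor
    pooled = weighted_sum / total_weight
    pooled_se = sqrt(1 / total_weight)  # shrinks as more trials are added
    return exp(pooled), exp(pooled - z * pooled_se), exp(pooled + z * pooled_se)


for trial in TRIALS:
    lor, se = log_or_and_se(*trial)
    print(f"trial {trial}: OR {exp(lor):.2f} (95% CI {exp(lor - 1.96 * se):.2f} to {exp(lor + 1.96 * se):.2f})")
or_p, lo_p, hi_p = fixed_effect_pool(TRIALS)
print(f"pooled: OR {or_p:.2f} (95% CI {lo_p:.2f} to {hi_p:.2f})")
```

Every hypothetical trial's interval crosses 1, yet the pooled interval lies entirely below 1. That is the diamond-to-the-left pattern in the forest plot: the individual studies were not really conflicting, they were each too small.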

In fact, the story was so powerful that the forest plot of the corticosteroid meta-analysis was adopted as the logo of the Cochrane Collaboration. The Cochrane Collaboration is an independent nonprofit organization with members from more than 130 countries. Its primary role is to publish high-quality systematic reviews and meta-analyses in the field of health care in a timely manner, so that tragedies like this are never repeated. If you have questions about health care or medicine, this is where you should look first.

The corticosteroid meta-analysis is an unbelievable story of the power of an evidence-based approach, or more specifically of meta-analysis, to save tens of thousands of lives.

Conclusion

- Studies that appear similar may differ in some fundamental aspects and shouldn’t be compared directly.

- Instead of cherry picking individual studies to fit your conclusion, look at the totality of the evidence.

- Studies that are genuinely similar but conflicting should be combined using a systematic approach: a systematic review and meta-analysis.


Anoop | Mon October 14, 2013

Thanks, Sully! This is a very, very important article. I hope more people read it and understand its importance.

The corticosteroid story is just unbelievable and is an amazing example of the power of a single meta-analysis.

Some of the big journals, like the Lancet, now mandate that a new study SHOULD be placed in the context of the totality of the evidence. This is going to be the trend in other journals soon too.

Great info, Anoop. I don’t know how many times I have encountered this “argument”. Some relatively recent discussions led me to write a similar post (http://doingspeed.com/uncategorized/you-can-find-evidence-to-support-any-view/)

Thanks for keeping the focus on evidence-based practice.

Nick Ng | Mon October 21, 2013

Awesome stuff, Anoop. As a freelance writer and blogger, my editors prefer that I use evidence-based materials to address issues and answer questions. In the year I’ve been writing, I have realized that some studies are more accurate and less biased than others, even those from PubMed.

Thanks for teaching us this.

Anoop | Wed October 23, 2013

Thanks Jeff!!

Thanks Nick! Please share with your readers.

I write about biceps curls, I get hundreds of likes. I write about saving thousands of lives, I get a few likes 😊

Great articles, Anoop!

I just started in the exercise physiology field and your articles have really helped. Thanks, buddy.

MetroEast Beast | Thu December 05, 2013

As long as a study is detailed enough that another researcher could repeat it, it’s fine. If the findings are different, well, that’s science! The later researchers should explain the issues with the first study.

Good analysis! I do think studies are sometimes conflicting, but I believe in science as always. Every conflict brings new progress. Take GM food: the conflict obviously never ends. Whenever new evidence is found that it is bad for the environment and health, there is always other evidence showing this is wrong.

Non Cargo-Cult-Junk "Scientist" | Fri April 01, 2016

The problem is every field wants to call itself “science” but many simply are not. This does NOT mean it has no value, but its claims must be considered according to its standards of intellectual rigor. Not all questions are practical to answer in a very rigorous fashion. This is not a fault of the practitioners, but we must consider how much weight can actually be placed upon their claims. Physics, chemistry, earth/planetary science, astronomy, and most of biology are sciences. Psychology, sociology, economics, political “science”, anthropology, archeology, nutrition department nutrition, kinesiology, exercise “science”, physical therapy, and a depressing amount of medicine are not sciences.

If you’re conducting studies instead of experiments, sorry, you’re not a scientist. If you rely on achieving a 0.05 p value significance, sorry, you’re not a scientist. That’s not to say these practices don’t have their place, but one must be honest about what one is doing. Just because you have peer review does not mean you’re automatically engaged in science. Just because you collect data does not mean you’re automatically engaged in science. Just because you have a PhD does not mean you’re automatically engaged in science. Just because you make predictions and then test them does not mean you’re automatically engaged in science. Science is not as simple as it’s made out to be in elementary school. There is no scientific method; what there is, is a standard of intellectual rigor and adherence to a careful blend of empiricism and rationalism. If you can’t meet this level of rigor, even for perfectly good reasons, you’re not doing science and your conclusions must be weighed accordingly. Sorry.

Anoop | Sat April 02, 2016

Hi,

First, psychology and sociology aren’t considered hard sciences because their measurements are qualitative rather than quantitative. Outcomes in exercise science and nutrition are quantifiable and repeatable, so lumping them together with psychology and economics is not right.

Second, like it or not, statistical significance determines the fate of almost all studies. This is true even in physics, although physicists often demand a much more stringent threshold than p < .05. In particle physics, the discovery standard is 5 sigma, which is roughly p < .0000003.

Third, agreed about scientific rigor. That’s one aspect of science that bothers me a lot, and that includes the biomedical field, which you called “science”. A recent paper showed 96% of the hypotheses in the biomedical field to be true so far! Should we still call it “science”?
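For readers wondering how the "sigma" thresholds used in physics relate to p-values: both are tail areas of the standard normal distribution. A minimal Python sketch using only `math.erfc` from the standard library (the function names are illustrative):

```python
from math import erfc, sqrt


def one_sided_p(sigma: float) -> float:
    """Probability that a standard normal value exceeds `sigma` (upper tail only)."""
    return 0.5 * erfc(sigma / sqrt(2))


def two_sided_p(sigma: float) -> float:
    """Probability of landing at least `sigma` SDs from zero in either direction."""
    return erfc(sigma / sqrt(2))


for s in (1.96, 3.0, 5.0):
    print(f"{s:>4} sigma: one-sided p = {one_sided_p(s):.1e}, two-sided p = {two_sided_p(s):.1e}")
```

With this conversion, 1.96 sigma gives the familiar two-sided p of about 0.05, while the 5-sigma discovery convention in particle physics corresponds to a one-sided p of about 3 × 10⁻⁷.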